The Times Tech Summit 2024 Key Takeaways

Now in its third year, The Times and The Sunday Times Tech Summit returned to London this week, bringing together the UK’s foremost innovation experts and tech leaders. Set in a year of substantial change for politics, technology and society, the summit looked at how AI is transforming businesses (spoiler: quite a lot). There was a full day of discussions and panels with engaging expert speakers, including Amadeus Partner Amelia Armour.

The discussions tackled issues such as whether AI will reach human-level intelligence, or Artificial General Intelligence (AGI) as it was referred to throughout, and how AI can be regulated. A highlight of the day was the panel on the investor’s perspective, which asked whether the UK can become a tech giant. Read on for a full breakdown of the day.

The UK Government’s perspective on AI

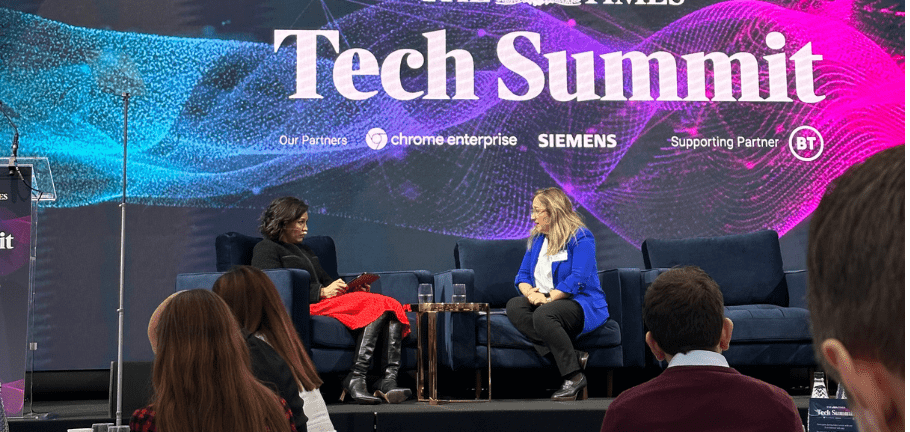

Opening the summit was a discussion between Katie Prescott, Technology Business Editor at The Times, and Feryal Clark, Parliamentary Under-Secretary of State for AI and Digital Government. With a new UK Labour Government in place, legislation and policy changes around AI have featured regularly in the press, so it wasn’t surprising that the topic came up in their discussion. Here are some key points from the session:

- The AI opportunities plan, led by Matt Clifford, is gaining support.

- There’s a focus on improving public services through the use of AI.

- Addressing the friction over copyright between the creative and AI industries is a top priority, but it is proving difficult to navigate.

- Legislation or policy changes on AI are expected before the end of the year.

While the session posed more questions than answers, it’s good to see the Government taking an active role in events like this to hear perspectives from leaders in AI before making policy decisions.

Artificial General Intelligence or AGI

Since ChatGPT’s release in November 2022, AI has captured the public’s attention. The discussions around it usually follow the same lines: opportunities and risks. It was much the same at The Times Tech Summit, but with a focus on Artificial General Intelligence, or AGI.

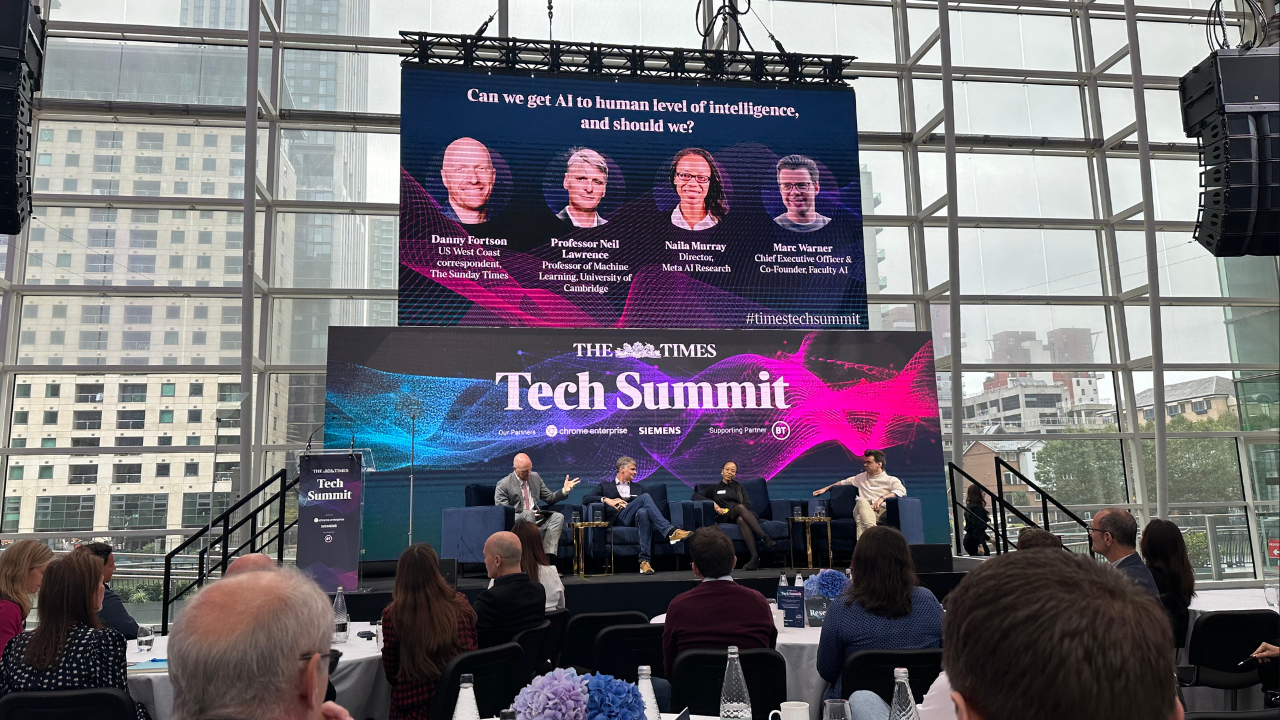

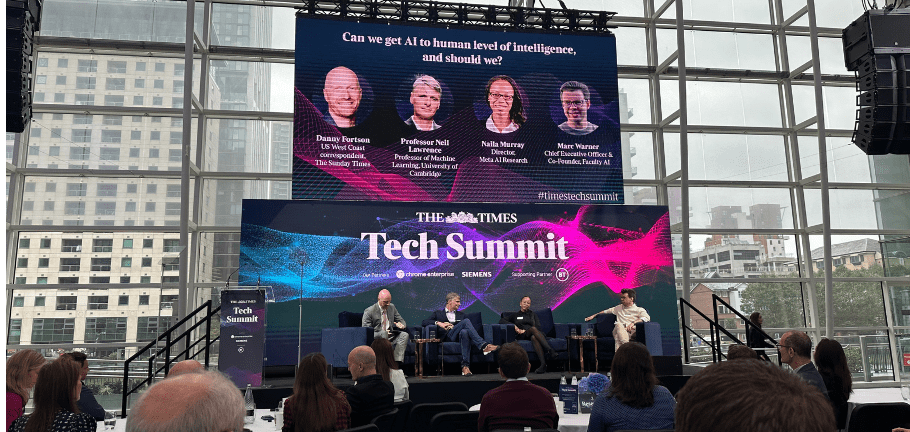

A panel moderated by Danny Fortson (US West Coast Correspondent) and featuring Professor Neil Lawrence (Professor of Machine Learning, Cambridge University), Naila Murray (Director, Meta AI Research) and Marc Warner (CEO and co-founder, Faculty AI) tackled it head-on.

The panel was titled “Can we get AI to human-level intelligence, and should we?”. While there was some general agreement on the risks, most of the panellists argued that the right questions aren’t being asked. Here’s a digest:

- Achieving AGI means solving unbounded problems and training a model on more data than even Large Language Models (LLMs) were trained on, which requires substantial investment and energy.

- Professor Neil Lawrence stressed that it’s dangerous for the UK Government to think that AI or AGI could replace public services.

- There’s a need for research into how humans can work with AI. Marc Warner used the example of building a computer: you include a screen, mouse and keyboard so that humans can interact with it, but none of these is needed if the machine isn’t built for human interaction.

- Meta is making its AI open source. Naila Murray pointed out the importance of allowing people to tinker with the technology and discover emerging capabilities in its foundation models.

- A lot of time and energy is spent worrying about whether AI will take people’s jobs, rather than on seeing how it could improve existing ones.

The investor’s perspective on UK innovation and AI

Understanding UK innovation and the relevance of AI in that conversation doesn’t require a crystal ball. This is where the perspective of investors in the area can give an overview of the direction of travel. If you follow the money, you’ll see where the innovation lies.

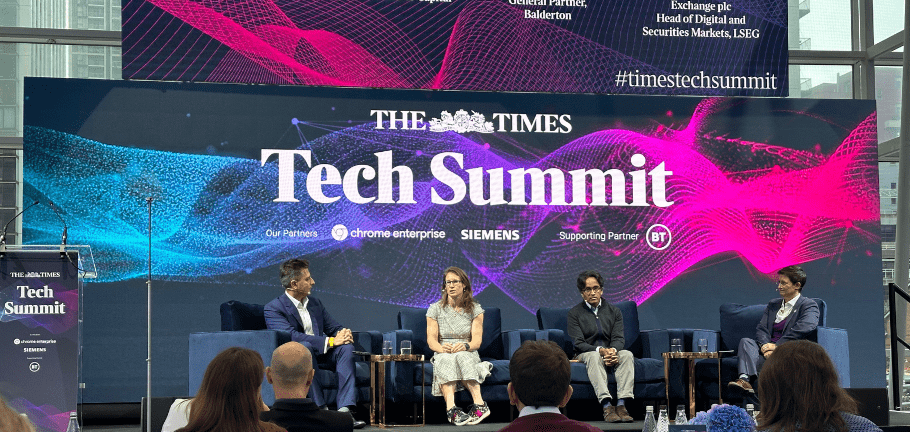

Oliver Shah (Associate Editor, The Sunday Times) moderated a discussion between Amelia Armour (Partner at Amadeus), Suranga Chandratillake (General Partner, Balderton) and Dame Julia Hoggett (CEO of London Stock Exchange) on this topic. Here’s what they had to say:

- Some investors, including Amadeus, have changed their approach over the past few years as new companies have emerged in the wake of LLMs and GenAI. Products or services built on top of these models generally carry less intellectual property.

- Identifying true innovation among the plethora of new AI companies is challenging. Those that solve real problems and serve existing or future big markets will be able to fundraise. One example is PolyAI, the Amadeus-backed AI call centre automation company.

- Truly innovative UK companies need more support. Suranga Chandratillake shared the success story of Balderton-backed Revolut, noting that the company struggled to raise early-stage funding in Europe and relied on the US and Asia for most of its funding.

- UK pension funds could provide a much-needed boost to fundraising in the private market, with the potential for greater returns. Amelia Armour illustrated this point with US VC funds, which are over 60% (currently 72%) funded by US pensions.

How businesses can stay secure

There was healthy debate throughout the summit on the risks posed by AI, with one panel covering “Rogue AI”. Ian McCormack, Deputy CTO at the National Cyber Security Centre (NCSC), covered illegal services such as ransomware-as-a-service. These services, which mirror the business models of legitimate companies, have popped up in the wake of GenAI adoption. Threat actors are also using GenAI to make phishing campaigns more credible.

The true scale of the impact of these cyber-attacks on businesses is unknown, as some victims simply pay the hackers and hope the problem goes away. Ian mentioned that the NCSC is focused on creating additional measures to ensure that businesses do report these attacks. Not all choose to stay silent, though, as the British Library demonstrated with its transparency following a 2023 attack by an affiliate of the Rhysida ransomware-as-a-service gang. In that attack, 600GB of data, including users’ details, was stolen and leaked.

In another panel on security, Katie Prescott spoke with Mike Beck (Global CISO, Darktrace), Etienne De Burgh (Senior Security & Compliance Specialist, Google Cloud CISO) and Howard Watson (Chief Security & Networks Officer, BT Group). They suggested that while awareness of these threats helps, the government needs to deliver a solid cybersecurity bill with a specific set of outcomes.

What the future holds

Concerns about the loss of jobs to AI are still front of mind for many, but the outlook at the summit was positive. Carl Ennis, CEO of Siemens UK & Ireland, was keen to point out that AI need not be a threat to people who adopt it in their jobs, and that is before counting the jobs created by innovative AI companies. Professor Neil Lawrence also noted how GenAI has transformed the way humans interact with technology, allowing everyday people to speak with machines directly, without software engineers as intermediaries.

Many fear that regulating AI would threaten UK innovation in the area. Melanie Dawes, Chief Executive at Ofcom, shared the view that regulation would target not the technology itself but the risks arising from how it’s used. Ofcom typically looks at illegal harms, such as those covered by the Online Safety Act passed in 2023. While it’s not yet clear what direction the current UK Government will take, it’s a positive sign to see these discussions happening at events like this.

The UK has always been a beacon for technology, from the work of Alan Turing to today’s cutting-edge advances in AI. The future may be uncertain, but that doesn’t stop innovation; if anything, uncertainty usually spurs it.